Field Test: Operation Pitchfork

March 24

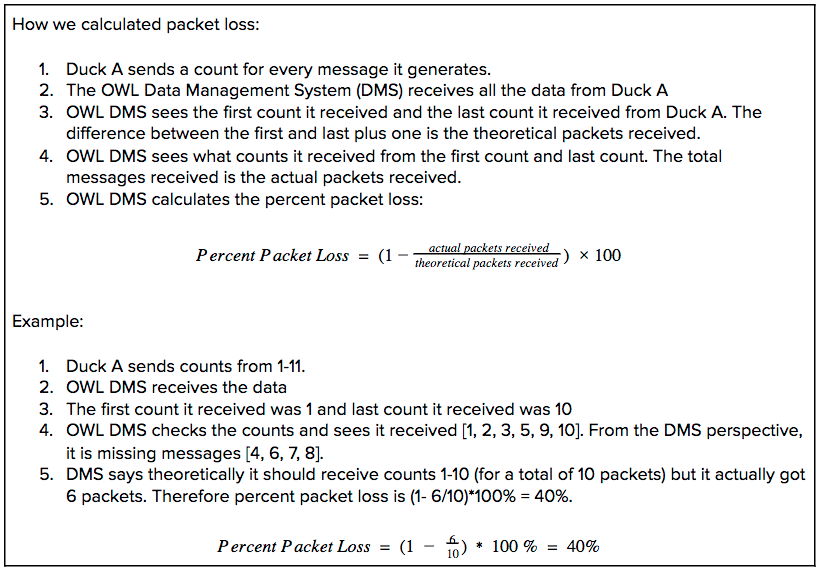

On Sunday, March 14, we left Brooklyn for Allentown, PA. Our goal was to test Version 2 (V2) of the ClusterDuck Protocol (CDP), released in January 2021, on a larger scale. Until then we had only been able to test V2 in our living rooms (thanks, COVID); this trip gave us the chance to test it in a suburban area. The metric we focused on for this deployment was packet loss: how many packets sent by the Ducks in the ClusterDuck Network never reached us? Below is our analysis of the data collected using V2.
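To pin down the metric, here is a minimal Python sketch that computes per-Duck packet loss from the records that did arrive. It assumes, hypothetically, that every record carries a per-Duck sequence number `seq` that increases by one per packet; the record format and the `packet_loss` function are illustrative and are not part of the CDP.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def packet_loss(records: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Return {duck_id: fraction of packets lost} from (duck_id, seq) records.

    Assumes (hypothetically) consecutive per-Duck sequence numbers, so every
    gap between the lowest and highest seq received is a lost packet.
    Packets lost after a Duck's last received one cannot be counted.
    """
    seen: Dict[str, Set[int]] = defaultdict(set)
    for duck_id, seq in records:
        seen[duck_id].add(seq)  # a set also ignores duplicate deliveries
    loss = {}
    for duck_id, seqs in seen.items():
        expected = max(seqs) - min(seqs) + 1
        loss[duck_id] = 1 - len(seqs) / expected
    return loss


records = [("REDTELE1", 0), ("REDTELE1", 1), ("REDTELE1", 3),
           ("REDTELE2", 0), ("REDTELE2", 4)]
print(packet_loss(records))  # {'REDTELE1': 0.25, 'REDTELE2': 0.6}
```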

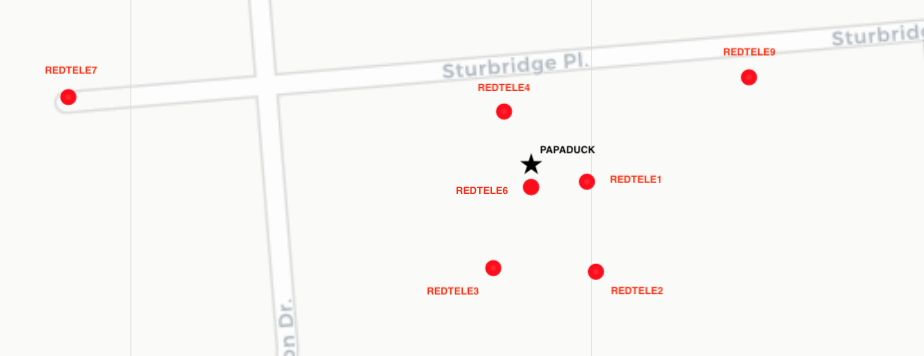

We deployed a total of 8 Ducks (7 MamaDucks and 1 PapaDuck). The MamaDucks were built on TTGO T-Beam boards, and the PapaDuck was a Heltec ESP32.

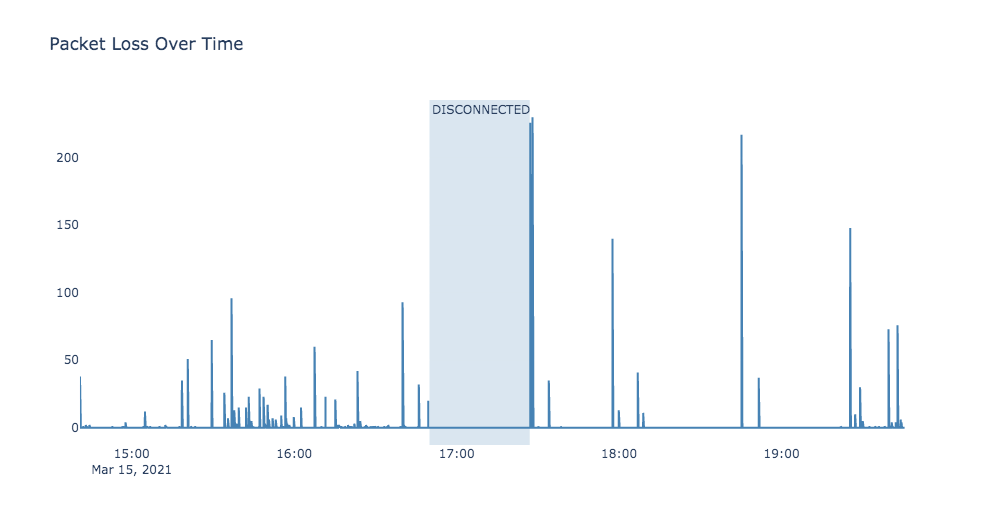

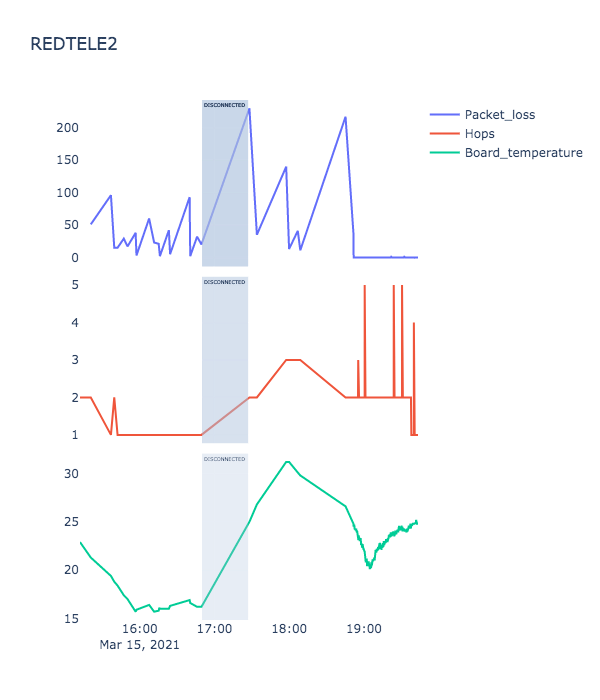

The network shows substantial packet loss. Around 17:00, we noticed that the PAPADUCK was not connected to the internet, so we rebooted it. After the reboot, packet loss appears to be less frequent. This was an interesting finding, because our initial hypothesis was that packets were failing to reach the PAPADUCK because of the network algorithm.

Since packet loss was less frequent after the PAPADUCK reboot, we have to ask: did we lose packets in the network, or did the PAPADUCK receive all the data but have trouble publishing it to the cloud? The network currently has no built-in functionality to answer this question, so we need to go back to the drawing board and build it: the PAPADUCK should report when it has received data but is failing to publish it to the cloud.
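A minimal Python sketch of that kind of reporting, under our own assumptions: the PapaDuck counts packets it receives from the mesh separately from packets it successfully publishes, and queues unpublished packets for a later retry. The `PublishTracker` class and the `publish` callback (assumed to return `True` on success) are hypothetical and do not exist in the CDP today.

```python
from collections import deque
from typing import Callable, Deque


class PublishTracker:
    """Hypothetical PapaDuck-side bookkeeping; not part of the CDP.

    Counts what the gateway received from the mesh separately from what it
    managed to publish, so a publishing outage is not mistaken for mesh loss.
    """

    def __init__(self, publish: Callable[[bytes], bool], max_backlog: int = 100):
        self.publish = publish  # assumed: returns True if the cloud accepted the packet
        # Packets awaiting (re)publication; when full, the deque evicts the oldest.
        self.backlog: Deque[bytes] = deque(maxlen=max_backlog)
        self.received = 0
        self.published = 0

    def on_packet(self, packet: bytes) -> None:
        """Call for every packet the PapaDuck receives from the mesh."""
        self.received += 1
        self.backlog.append(packet)
        self.flush()

    def flush(self) -> None:
        """Publish queued packets oldest-first; stop at the first failure."""
        while self.backlog:
            if not self.publish(self.backlog[0]):
                return  # e.g. no internet: keep the packet for a later retry
            self.backlog.popleft()
            self.published += 1

    def status(self) -> dict:
        return {
            "received": self.received,
            "published": self.published,
            "pending": len(self.backlog),
            # Received but evicted from a full backlog: lost at the gateway, not in the mesh.
            "dropped": self.received - self.published - len(self.backlog),
        }


link = {"up": False}  # stands in for the PapaDuck's internet connection
tracker = PublishTracker(lambda packet: link["up"])
tracker.on_packet(b"temp=21.5")
print(tracker.status())  # {'received': 1, 'published': 0, 'pending': 1, 'dropped': 0}
link["up"] = True  # connection restored, e.g. after a reboot
tracker.flush()
print(tracker.status())  # {'received': 1, 'published': 1, 'pending': 0, 'dropped': 0}
```

With counts like these, a gap between `received` and `published` points at the cloud connection rather than the mesh.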

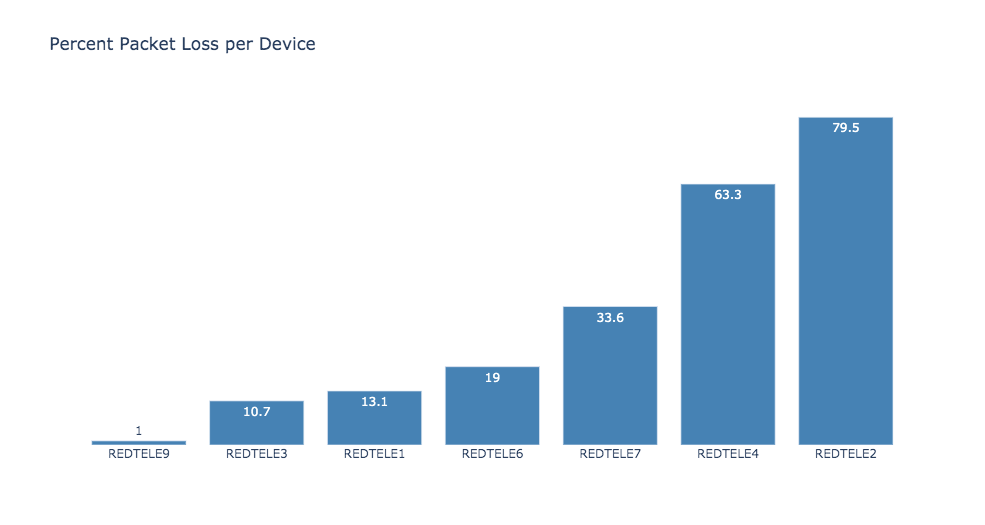

REDTELE2 experienced the most packet loss. Looking at REDTELE2 closely, we see that it lost many packets at the beginning, when its hop count was low. Toward the end (around 18:45), when the hop count increased, its packet loss fell off sharply. This may indicate that early on, REDTELE2 suffered many data collisions, or that the surrounding Ducks were busy when REDTELE2 was broadcasting. This reinforces the case for two-way communication in the CDP. With two-way communication, the PapaDuck can send back a confirmation that it received a message. If a Duck does not receive that confirmation, it resends the data.
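The confirm-and-resend loop described above is essentially stop-and-wait ARQ. Below is a minimal Python sketch of it with randomized exponential backoff; the backoff is our own choice of technique, not something the CDP currently implements, and `send_with_retries`, `LossyLink`, and the callback signatures are hypothetical. The toy link drops the first two transmissions to stand in for a Duck whose early broadcasts collide.

```python
import random
from typing import Callable, Set


def send_with_retries(
    send: Callable[[int], None],
    wait_for_ack: Callable[[int, float], bool],
    msg_id: int,
    max_retries: int = 3,
    base_timeout_s: float = 1.0,
) -> int:
    """Stop-and-wait delivery: transmit msg_id until it is acknowledged.

    Returns how many transmissions were needed; raises TimeoutError if no
    ACK arrives after 1 + max_retries attempts.
    """
    for attempt in range(max_retries + 1):
        send(msg_id)
        # Wait longer after each failure (exponential backoff) with random jitter,
        # so two Ducks whose packets collided are unlikely to resend in lockstep.
        timeout = base_timeout_s * (2 ** attempt) * random.uniform(1.0, 1.5)
        if wait_for_ack(msg_id, timeout):
            return attempt + 1
    raise TimeoutError(f"no ACK for message {msg_id}")


class LossyLink:
    """Toy channel for testing: the first `drops` transmissions are lost."""

    def __init__(self, drops: int):
        self.drops = drops
        self.delivered: Set[int] = set()

    def send(self, msg_id: int) -> None:
        if self.drops > 0:
            self.drops -= 1  # lost, e.g. to a collision
        else:
            self.delivered.add(msg_id)  # reached the PapaDuck, which would ACK it

    def wait_for_ack(self, msg_id: int, timeout: float) -> bool:
        # A real Duck would block for up to `timeout` seconds; the toy answers at once.
        return msg_id in self.delivered


link = LossyLink(drops=2)
print(send_with_retries(link.send, link.wait_for_ack, msg_id=7))  # 3
```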

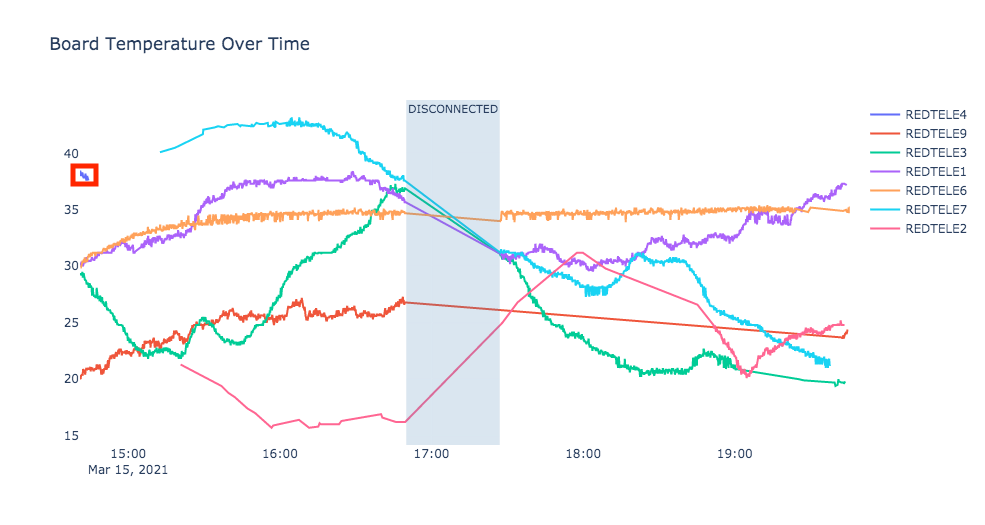

Looking at the board temperatures of the Ducks, two things stand out. First, REDTELE4 (red box) stopped working after the first 15 minutes. Second, REDTELE2 (the Duck with the highest packet loss) appears to have had the lowest board temperature, and it stayed the lowest right up until the PAPADUCK disconnected.

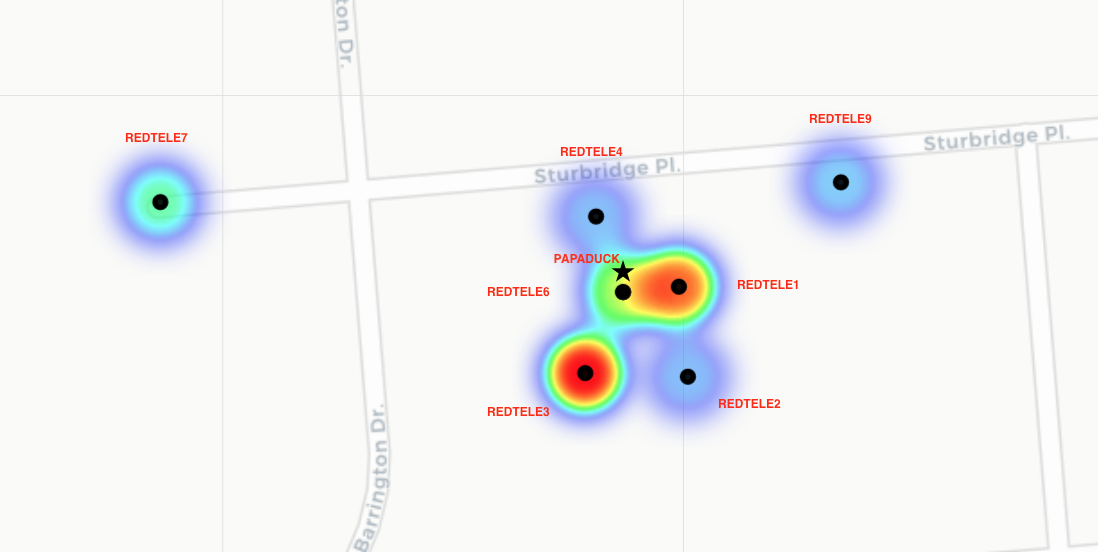

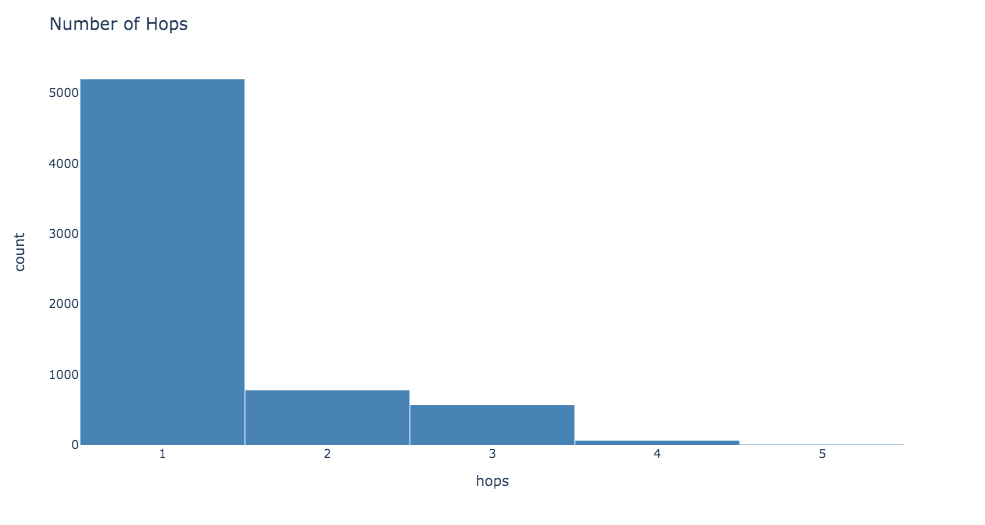

One of the issues V2 fixed was the path. Before V2, there was no mechanism to direct a packet toward the PapaDuck; packets traveled aimlessly from Duck to Duck, hoping to find their way back home. V2 integrates a mechanism that helps guide packets toward the PapaDuck, and the results are a dramatic improvement over earlier versions.

This heat map shows which Ducks have been working overtime. REDTELE3, REDTELE1, and REDTELE6 received most of the packets. This means each of them was not only sending its own data but also relaying data from other Ducks. This makes sense: these Ducks are close to the PapaDuck, so they are well placed to relay packets from Ducks farther away.
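One plausible way to build such a heat map is to count, for each Duck, how many packets list it as a relay. A minimal Python sketch, assuming a hypothetical record format in which each packet's path is a list of DeviceIDs in visiting order, starting with the originating Duck and not including the PapaDuck; `relay_counts` is an illustrative helper, not a CDP function.

```python
from collections import Counter
from typing import Iterable, List


def relay_counts(paths: Iterable[List[str]]) -> Counter:
    """Count how many packets each Duck relayed.

    Assumed path format (hypothetical): DeviceIDs in visiting order, origin
    first, PapaDuck excluded. Every ID after the origin is a relay.
    """
    counts: Counter = Counter()
    for path in paths:
        counts.update(path[1:])  # skip the Duck that created the packet
    return counts


paths = [
    ["REDTELE5", "REDTELE3"],
    ["REDTELE7", "REDTELE6", "REDTELE3"],
    ["REDTELE1"],  # sent straight to the PapaDuck: no relays
]
print(relay_counts(paths).most_common())  # [('REDTELE3', 2), ('REDTELE6', 1)]
```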

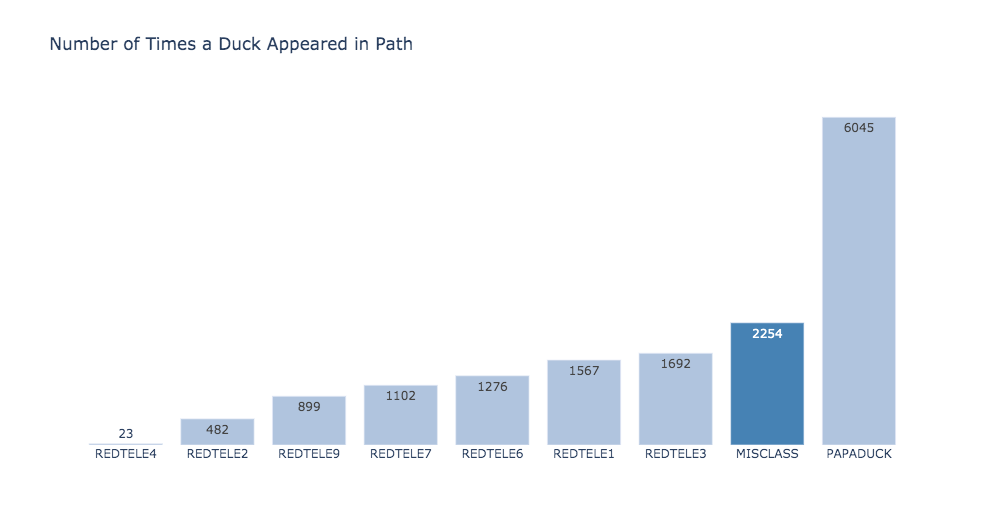

There also appears to be an issue with relaying the path: sometimes a DeviceID is not written into the path correctly, with a character missing from either the beginning or the end. These mislabeled IDs make up about 14% of the IDs (dark blue bar).
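Truncated IDs like these can be flagged by comparing each path entry against the DeviceIDs that were actually deployed. A minimal Python sketch, assuming path entries have already been split into individual ID strings; `classify_id` and `mislabeled_fraction` are hypothetical helpers.

```python
from typing import Iterable, Set


def classify_id(entry: str, known_ids: Set[str]) -> str:
    """Label one path entry: 'ok', 'missing_first', 'missing_last' or 'unknown'."""
    if entry in known_ids:
        return "ok"
    for device_id in sorted(known_ids):  # sorted for a deterministic result
        if entry == device_id[1:]:
            return "missing_first"
        if entry == device_id[:-1]:
            return "missing_last"
    return "unknown"


def mislabeled_fraction(entries: Iterable[str], known_ids: Set[str]) -> float:
    """Share of path entries that are not an exact deployed DeviceID."""
    labels = [classify_id(e, known_ids) for e in entries]
    if not labels:
        return 0.0
    return sum(label != "ok" for label in labels) / len(labels)


known = {"PAPADUCK", "REDTELE1", "REDTELE2"}
entries = ["REDTELE1", "EDTELE2", "REDTELE", "REDTELE1"]
print([classify_id(e, known) for e in entries])
# ['ok', 'missing_first', 'missing_last', 'ok']
print(mislabeled_fraction(entries, known))  # 0.5
```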

The hop counts show how much V2 improved the CDP: the new path implementation reduces the number of hops a packet needs to reach the PapaDuck.
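To compare hop counts between deployments, a histogram plus the mean is a simple summary. A minimal Python sketch, assuming each path lists the DeviceIDs that transmitted the packet (origin first, PapaDuck excluded), so that its length is the hop count; `hop_summary` is a hypothetical helper, not a CDP function.

```python
from collections import Counter
from typing import List, Tuple


def hop_summary(paths: List[List[str]]) -> Tuple[Counter, float]:
    """Return (histogram of hop counts, mean hop count).

    Assumed path format (hypothetical): the DeviceIDs that transmitted the
    packet, origin first, PapaDuck excluded, so each entry is one hop.
    """
    hops = [len(path) for path in paths]
    if not hops:
        return Counter(), 0.0
    return Counter(hops), sum(hops) / len(hops)


histogram, avg = hop_summary([
    ["REDTELE1"],
    ["REDTELE5", "REDTELE3"],
    ["REDTELE7", "REDTELE6", "REDTELE3"],
    ["REDTELE4", "REDTELE1"],
])
print(sorted(histogram.items()), avg)  # [(1, 1), (2, 2), (3, 1)] 2.0
```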

The next steps for improving the CDP are: adding bidirectional communication so that received packets are acknowledged, having the PapaDuck indicate when it fails to publish a packet, and fixing how DeviceIDs are inserted into the path. With V2, the ClusterDuck Protocol has come a long way since our first deployment in a Brooklyn living room. New challenges continue to arise, but with the help of the CDP community we can overcome them and keep connecting the people, places, and things you care about most.

If you want to learn how to deploy a ClusterDuck Network, have questions or comments, or would like to join our ClusterDuck Protocol community, give us a quack on Slack.